Using Ray as a foundation for a real-time analytic monitoring framework

Ray’s actor model and its simplicity of implementation in Python-based frameworks are a primary motivation for using it in a higher-level research framework (Realm, to be published) for real-time analytic monitoring of critical events (e.g., suicide risk, infectious diseases) that my colleagues and I are working on. The purpose of Realm is to provide real-time alerts in response to, for example, continuously occurring, high-frequency clinical events; support for online learning; distribution of model updates through the hierarchy of models (local/embedded, regional, and central); and targeted, selective, and context-specific updates to these models. While other frameworks (Akka, Spark, Kafka, etc.) provide most of these functions, we are exploring and using Ray because of its simplicity of implementation (using actors…

Modern Parallel and Distributed Python: A Quick Tutorial on Ray

Ray is an open source project for parallel and distributed Python. This article was originally posted here. Parallel and distributed computing are a staple of modern applications. We need to leverage multiple cores or multiple machines to speed up applications or to run them at a large scale. The infrastructure for crawling the web and responding to search queries is not a single-threaded program running on someone’s laptop but rather a collection of services that communicate and interact with one another. This post will describe how to use Ray to easily build applications that can scale from your laptop to a large cluster. Why Ray? Many tutorials explain how to use Python’s multiprocessing module. Unfortunately, the multiprocessing module is severely limited in…

Ray: Application-level scheduling with custom resources

Ray intends to be a universal framework for a wide range of machine learning applications. This includes distributed training, machine learning inference, data processing, latency-sensitive applications, and throughput-oriented applications. Each of these applications has different and, at times, conflicting requirements for resource management. Ray intends to cater to all of them, as the newly emerging microkernel for distributed machine learning. To achieve that kind of generality, Ray gives developers explicit control over task and actor placement through custom resources. In this blog post we are going to talk about use cases and provide examples. This article is intended for readers already familiar with…

An Overview of the CALM Theorem

For folks who care about what’s possible in distributed computing: Peter Alvaro and I wrote an introduction to the CALM Theorem and subsequent work that is now up on arXiv. The CALM Theorem formally characterizes the class of programs that can achieve distributed consistency without the use of coordination. — Joe Hellerstein (Cross-posted from databeta.wordpress.com.) I spent a good fraction of my academic life in the last decade working on a deeper understanding of how to program the cloud and other large-scale distributed systems. I was enormously lucky to collaborate with and learn from amazing friends over this period in the BOOM project, and see our work picked up and extended by new friends and colleagues. Our research was motivated by…
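To make the teaser above a little more concrete: as the CALM paper frames it, the programs that can be consistent without coordination are the monotonic ones, whose output only grows as more input arrives. The sketch below is a toy illustration of that idea and is not code from the paper; the function names `monotone_union` and `early_absence_check` are hypothetical. It delivers the same messages in every possible order and compares the outcomes.

```python
from itertools import permutations


def monotone_union(messages):
    """Monotone: each new message can only add to the result."""
    seen = set()
    for msg in messages:
        seen |= msg
    return frozenset(seen)


def early_absence_check(messages, item):
    """Non-monotone: claims `item` is absent after seeing only the first
    message, a conclusion that later messages could retract."""
    return item not in messages[0]


messages = [{"a"}, {"b"}, {"c"}]
orders = list(permutations(messages))

# Collect the distinct outcomes across all delivery orders.
union_results = {monotone_union(order) for order in orders}
absence_results = {early_absence_check(order, "a") for order in orders}

print(len(union_results))    # one outcome regardless of order
print(len(absence_results))  # outcome depends on which message came first
```

The union converges to a single answer no matter how messages are interleaved, while the early absence check would need some form of coordination (knowing that all messages have arrived) before it could answer safely.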

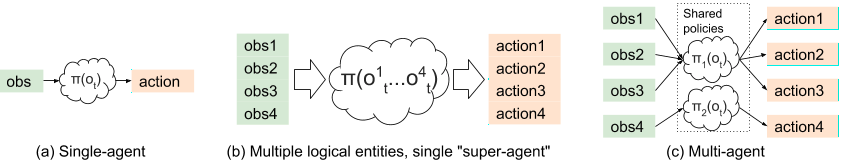

An Open Source Tool for Scaling Multi-Agent Reinforcement Learning

We just rolled out general support for multi-agent reinforcement learning in Ray RLlib 0.6.0. This blog post is a brief tutorial on multi-agent RL and how we designed RLlib to support it. Our goal is to enable multi-agent RL across a range of use cases, from leveraging existing single-agent algorithms to training with custom algorithms at large scale.

Going Fast and Cheap: How We Made Anna Autoscale

Background: In an earlier blog post, we described a system called Anna, which used a shared-nothing, thread-per-core architecture to achieve lightning-fast speeds by avoiding all coordination mechanisms. Anna also used lattice composition to enable a rich variety of coordination-free consistency levels. The first version of Anna blew existing in-memory KVSes out of the water: Anna is up to 700x faster than Masstree, an earlier state-of-the-art research KVS, and up to 800x faster than Intel’s “lock-free” TBB hash table. You can find the previous blog post here and the full paper here. We refer to that version of Anna as “Anna v0.” In this post, we describe how we extended the fastest KVS in the cloud to be extremely cost-efficient and…
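The lattice composition mentioned above can be sketched in a few lines. This is a minimal illustration in Python, assuming two hypothetical classes (`MaxInt` and `MapLattice`) that are not Anna's actual API. A lattice here is a value with a `merge` operation that is associative, commutative, and idempotent, and a map whose values are lattices is itself a lattice, which is the sense in which lattices compose.

```python
class MaxInt:
    """Integer lattice whose merge keeps the larger value."""

    def __init__(self, value=0):
        self.value = value

    def merge(self, other):
        return MaxInt(max(self.value, other.value))


class MapLattice:
    """Map lattice: merges key by key, delegating to each value's merge."""

    def __init__(self, entries=None):
        self.entries = dict(entries or {})

    def merge(self, other):
        merged = dict(self.entries)
        for key, val in other.entries.items():
            merged[key] = merged[key].merge(val) if key in merged else val
        return MapLattice(merged)

    def snapshot(self):
        """Plain dict view, convenient for comparing replica states."""
        return {key: val.value for key, val in self.entries.items()}


# Three replicas that each saw different updates (made-up values).
replica_a = MapLattice({"x": MaxInt(3), "y": MaxInt(1)})
replica_b = MapLattice({"x": MaxInt(5)})
replica_c = MapLattice({"y": MaxInt(4), "z": MaxInt(2)})

# Merging in different orders, or merging a replica with itself,
# leads to the same state.
order_1 = replica_a.merge(replica_b).merge(replica_c).snapshot()
order_2 = replica_c.merge(replica_a).merge(replica_b).snapshot()
self_merge = replica_a.merge(replica_a).snapshot()

print(order_1 == order_2)
print(self_merge == replica_a.snapshot())
```

Because the merged state does not depend on arrival order or on duplicate delivery, replicas can exchange updates without locks or agreement protocols, which is the property the post attributes to Anna's coordination-free design.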

Exploratory data analysis of genomic datasets using ADAM and Mango with Apache Spark on Amazon EMR (AWS Big Data Blog Repost)

Note: This blog post is replicated from the AWS Big Data Blog and can be found here. As the cost of genomic sequencing has rapidly decreased, the amount of publicly available genomic data has soared over the past couple of years. New cohorts and studies have produced massive datasets consisting of over 100,000 individuals. Simultaneously, these datasets have been processed to extract genetic variation across populations, producing massive amounts of variation data for each cohort. In this era of big data, tools like Apache Spark have provided a user-friendly platform for batch processing of large datasets. However, to use such tools as a sufficient replacement for current bioinformatics pipelines, we need more accessible and comprehensive APIs for processing genomic data. We…

Implementing A Parameter Server in 15 Lines of Python with Ray

This blog post was originally posted here. View the code on Gist.

Distributed Policy Optimizers for Scalable and Reproducible Deep RL

In this blog post we introduce Ray RLlib, an RL execution toolkit built on the Ray distributed execution framework. RLlib implements a collection of distributed policy optimizers that make it easy to use a variety of training strategies with existing reinforcement learning algorithms written in frameworks such as PyTorch, TensorFlow, and Theano. This enables complex architectures for RL training (e.g., Ape-X, IMPALA) to be implemented once and reused many times across different RL algorithms and libraries. We discuss the design and performance of policy optimizers in more detail in the RLlib paper. What’s next for RLlib: In the near term we plan to continue building out RLlib’s set of policy optimizers and algorithms. Our aim is for RLlib to serve…

Anna: A Crazy Fast, Super-Scalable, Flexibly Consistent KVS 🗺

This article is cross-posted from the DataBeta blog. There’s fast and there’s fast. This post is about Anna, a key/value database design from our team at Berkeley that’s got phenomenal speed and buttery smooth scaling, with an unprecedented range of consistency guarantees. Details are in our upcoming ICDE18 paper on Anna. Conventional wisdom (or at least Jeff Dean wisdom) says that you have to redesign your system every time you scale by 10x. As researchers, we asked the counter-cultural question: what would it take to build a key-value store that would excel across many orders of magnitude of scale, from a single multicore box to the global cloud? Turns out this kind of curiosity can lead to a system with pretty interesting practical…