Ray is an open source project for parallel and distributed Python. This article was originally posted here.

Parallel and distributed computing are staples of modern applications. We need to leverage multiple cores or multiple machines to speed up applications or to run them at large scale. The infrastructure for crawling the web and responding to search queries is not a single-threaded program running on someone’s laptop but rather a collection of services that communicate and interact with one another. This post describes how to use Ray to build applications that can scale from your laptop to a large cluster.

Why Ray?

Many tutorials explain how to use Python’s multiprocessing module. Unfortunately, the multiprocessing module is severely limited in…
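For reference, the kind of multiprocessing usage that such tutorials typically cover can be sketched as follows. This is only an illustration of the standard-library baseline the excerpt mentions, not code from the original post; the names `square` and `parallel_squares` are hypothetical.

```python
from multiprocessing import Pool


def square(x):
    """Toy CPU-bound task; a stand-in for real work."""
    return x * x


def parallel_squares(values, processes=2):
    # The inputs are distributed across `processes` worker processes
    # on a single machine; map() returns results in input order.
    with Pool(processes=processes) as pool:
        return pool.map(square, values)


if __name__ == "__main__":
    print(parallel_squares(range(8)))
```

A pool like this spreads work over the cores of one machine only, which is the single-machine setting the excerpt contrasts with scaling out to a cluster.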

Scaling Interactive Pandas Workflows with Modin – Talk at PyData NYC 2018

In this talk, we will present Modin, a middle layer for DataFrames and interactive data science. Modin, formerly Pandas on Ray, is a library that allows users to speed up their Pandas workflows by changing a single line of code. During the presentation, we will discuss interesting ways Modin is being used and show how we improve the performance of the most popular Pandas operations. Modin is an early-stage project at UC Berkeley’s RISELab designed to facilitate the use of distributed computing for data science. A common challenge with tools for large-scale data is their significant learning overhead. Modin is designed to expose a set of familiar APIs (Pandas, SQL, etc.) and internally…

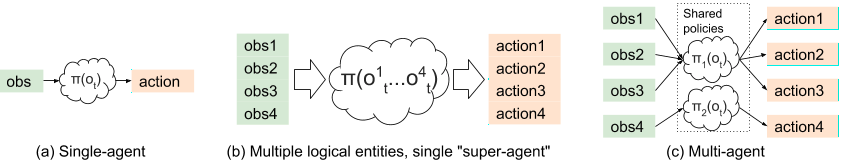

An Open Source Tool for Scaling Multi-Agent Reinforcement Learning

We just rolled out general support for multi-agent reinforcement learning in Ray RLlib 0.6.0. This blog post is a brief tutorial on multi-agent RL and how we designed for it in RLlib. Our goal is to enable multi-agent RL across a range of use cases, from leveraging existing single-agent algorithms to training with custom algorithms at large scale.
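To make the multi-agent setting concrete, here is a minimal pure-Python sketch. It assumes the common convention, which RLlib's multi-agent environments also follow to the best of our understanding, that observations, rewards, and done flags are dictionaries keyed by agent ID, with an `"__all__"` entry marking the end of the episode. The environment `TwoAgentGuessEnv` and the helper `run_episode` are hypothetical and do not use or depend on RLlib.

```python
import random


class TwoAgentGuessEnv:
    """Hypothetical toy environment (not part of RLlib).

    Each step, every agent observes a 0/1 target and is rewarded
    for choosing an action equal to that target.
    """

    def __init__(self, episode_len=3, seed=0):
        self.agent_ids = ["agent_0", "agent_1"]
        self.episode_len = episode_len
        self.rng = random.Random(seed)
        self.t = 0
        self.target = 0

    def reset(self):
        self.t = 0
        self.target = self.rng.randint(0, 1)
        # One observation per agent, keyed by agent ID.
        return {aid: self.target for aid in self.agent_ids}

    def step(self, action_dict):
        # Reward 1.0 for each agent whose action matches the target.
        rewards = {aid: float(act == self.target)
                   for aid, act in action_dict.items()}
        self.t += 1
        self.target = self.rng.randint(0, 1)
        obs = {aid: self.target for aid in action_dict}
        episode_over = self.t >= self.episode_len
        dones = {aid: episode_over for aid in action_dict}
        dones["__all__"] = episode_over  # assumed end-of-episode marker
        return obs, rewards, dones, {}


def run_episode(env, policies):
    """Roll out one episode; `policies` maps agent ID to callable(obs) -> action."""
    obs = env.reset()
    totals = {aid: 0.0 for aid in env.agent_ids}
    done = False
    while not done:
        actions = {aid: policies[aid](o) for aid, o in obs.items()}
        obs, rewards, dones, _ = env.step(actions)
        for aid, reward in rewards.items():
            totals[aid] += reward
        done = dones["__all__"]
    return totals
```

Because each agent ID maps to its own policy, an ordinary single-agent policy can be plugged in per agent, which is one way to read "leveraging existing single-agent algorithms" above.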

Modin (Pandas on Ray) – October 2018


Implementing A Parameter Server in 15 Lines of Python with Ray

This blog post was originally posted here.

Pandas on Ray – Early Lessons from Parallelizing Pandas


Distributed Policy Optimizers for Scalable and Reproducible Deep RL

In this blog post we introduce Ray RLlib, an RL execution toolkit built on the Ray distributed execution framework. RLlib implements a collection of distributed policy optimizers that make it easy to use a variety of training strategies with existing reinforcement learning algorithms written in frameworks such as PyTorch, TensorFlow, and Theano. This enables complex architectures for RL training (e.g., Ape-X, IMPALA) to be implemented once and reused many times across different RL algorithms and libraries. We discuss the design and performance of policy optimizers in more detail in the RLlib paper.

What’s next for RLlib

In the near term we plan to continue building out RLlib’s set of policy optimizers and algorithms. Our aim is for RLlib to serve…

Pandas on Ray
