The following paper from the RISELab has won the “Notable Paper Award” at the 2019 Artificial Intelligence and Statistics (AISTATS) conference: A Swiss Army Infinitesimal Jackknife; Giordano, R., Stephenson, W., Liu, R., Jordan, M. I. & Broderick, T. (2019); In K. Chaudhuri and M. Sugiyama (Eds.), Proceedings of the Twenty-Second Conference on Artificial Intelligence and Statistics (AISTATS), Okinawa, Japan.

An Open Source Tool for Scaling Multi-Agent Reinforcement Learning

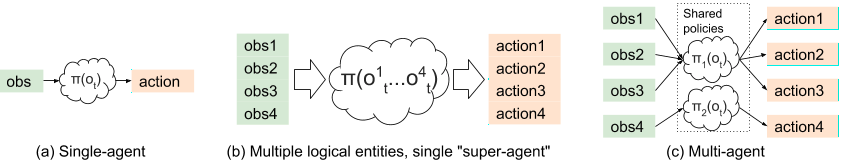

We just rolled out general support for multi-agent reinforcement learning in Ray RLlib 0.6.0. This blog post is a brief tutorial on multi-agent RL and how we designed for it in RLlib. Our goal is to enable multi-agent RL across a range of use cases, from leveraging existing single-agent algorithms to training with custom algorithms at large scale.
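To make the multi-agent setting concrete, here is a minimal sketch in plain Python. It is not RLlib's API: the environment, the policy names, and the `policy_for_agent` mapping are all hypothetical and exist only for illustration. It assumes a common convention in which observations, actions, and rewards are dictionaries keyed by agent id, and each agent id is mapped to a policy that may or may not be shared.

```python
class TwoAgentToyEnv:
    """Hypothetical toy environment: an agent earns +1 whenever it picks action 1."""

    def __init__(self, agent_ids=("agent_0", "agent_1"), horizon=5):
        self.agent_ids = agent_ids
        self.horizon = horizon
        self.t = 0

    def reset(self):
        self.t = 0
        # One observation per agent, keyed by agent id.
        return {aid: 0 for aid in self.agent_ids}

    def step(self, actions):
        self.t += 1
        obs = {aid: self.t for aid in actions}
        rewards = {aid: float(a == 1) for aid, a in actions.items()}
        done = self.t >= self.horizon
        return obs, rewards, done


def run_episode(env, policies, policy_for_agent):
    """Roll out one episode, routing each agent's observation to its policy."""
    obs = env.reset()
    totals = {aid: 0.0 for aid in obs}
    done = False
    while not done:
        actions = {
            aid: policies[policy_for_agent(aid)](o) for aid, o in obs.items()
        }
        obs, rewards, done = env.step(actions)
        for aid, r in rewards.items():
            totals[aid] += r
    return totals


# Two hypothetical fixed policies; a real setup would train these.
policies = {"always_one": lambda o: 1, "always_zero": lambda o: 0}


def policy_for_agent(agent_id):
    return "always_one" if agent_id == "agent_0" else "always_zero"


totals = run_episode(TwoAgentToyEnv(), policies, policy_for_agent)
```

Mapping both agent ids to the same policy name would give the shared-policy case, which is one way a single-agent algorithm can be reused in a multi-agent setting.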

SQL Query Optimization Meets Deep Reinforcement Learning

We show that deep reinforcement learning is successful at optimizing SQL joins, a problem studied for decades in the database community. Further, on large joins, we show that this technique executes up to 10x faster than classical dynamic programs and 10,000x faster than exhaustive enumeration. This blog post introduces the problem and summarizes our key technique; details can be found in our latest preprint, Learning to Optimize Join Queries With Deep Reinforcement Learning. SQL query optimization has been studied in the database community for almost 40 years, dating all the way back to System R’s classical dynamic programming approach. Central to query optimization is the problem of join ordering. Despite the problem’s rich history, there is still a continuous stream…
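For context, the two classical baselines named in this excerpt can be sketched as follows. This is not the learned optimizer from the preprint. It is a toy illustration under stated assumptions: the table cardinalities, selectivities, and cost model (sum of estimated intermediate result sizes, left-deep plans only) are all hypothetical. The dynamic program considers each of the 2^n table subsets once, while exhaustive enumeration scores all n! orderings.

```python
from itertools import combinations, permutations

# Hypothetical statistics, for illustration only.
CARD = {"A": 1000, "B": 100, "C": 10, "D": 500}
SEL = {
    frozenset({"A", "B"}): 0.01,
    frozenset({"B", "C"}): 0.1,
    frozenset({"C", "D"}): 0.05,
}


def result_size(tables):
    """Estimated row count of joining `tables`: product of cardinalities times
    the selectivity of each predicate between them (1.0 when none is given)."""
    names = sorted(tables)
    size = 1.0
    for t in names:
        size *= CARD[t]
    for a, b in combinations(names, 2):
        size *= SEL.get(frozenset((a, b)), 1.0)
    return size


def dp_left_deep(tables):
    """Cheapest left-deep order via dynamic programming over table subsets.
    Plan cost = sum of the sizes of its intermediate join results."""
    best = {frozenset([t]): (0.0, (t,)) for t in tables}
    for k in range(2, len(tables) + 1):
        for subset in combinations(tables, k):
            s = frozenset(subset)
            size = result_size(s)
            candidates = []
            for last in subset:
                # Extend the best plan for the remaining tables with `last`.
                prev_cost, prev_order = best[s - {last}]
                candidates.append((prev_cost + size, prev_order + (last,)))
            best[s] = min(candidates)
    return best[frozenset(tables)]


def exhaustive_left_deep(tables):
    """Same cost model, but scores every permutation of the tables."""
    def cost(order):
        return sum(result_size(order[:i]) for i in range(2, len(order) + 1))
    return min((cost(p), p) for p in permutations(tables))


tables = ["A", "B", "C", "D"]
dp_cost, dp_order = dp_left_deep(tables)
ex_cost, ex_order = exhaustive_left_deep(tables)
```

Both functions return the same minimum cost under this cost model. They can still return different orders when several plans tie.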

MLPerf: SPEC for ML

The RISE Lab at UC Berkeley today joins Baidu, Google, Harvard University, and Stanford University to announce a new benchmark suite for machine learning called MLPerf at the O’Reilly AI conference in New York City (see https://mlperf.org/). The MLPerf effort aims to build a common set of benchmarks that enables the machine learning (ML) field to measure system performance, eventually for both training and inference, from mobile devices to cloud services. We believe that a widely accepted benchmark suite will benefit the entire community, including researchers, developers, builders of machine learning frameworks, cloud service providers, hardware manufacturers, application providers, and end users. Historical Inspiration. We are motivated in part by the System Performance Evaluation Cooperative (SPEC) benchmark for general-purpose computing that drove rapid,…

Distributed Policy Optimizers for Scalable and Reproducible Deep RL

In this blog post we introduce Ray RLlib, an RL execution toolkit built on the Ray distributed execution framework. RLlib implements a collection of distributed policy optimizers that make it easy to use a variety of training strategies with existing reinforcement learning algorithms written in frameworks such as PyTorch, TensorFlow, and Theano. This enables complex architectures for RL training (e.g., Ape-X, IMPALA) to be implemented once and reused many times across different RL algorithms and libraries. We discuss in more detail the design and performance of policy optimizers in the RLlib paper. What’s next for RLlib: In the near term, we plan to continue building out RLlib’s set of policy optimizers and algorithms. Our aim is for RLlib to serve…

Reinforcement Learning brings together RISELab and Berkeley DeepDrive for a joint mini-retreat

On May 2, RISELab and the Berkeley DeepDrive (BDD) lab held a joint, largely student-driven mini-retreat. The event was aimed at exploring research opportunities at the intersection of the BDD and RISE labs. The topical focus of the mini-retreat was emerging AI applications, such as Reinforcement Learning (RL), and computer systems to support such applications. Trevor Darrell kicked off the event with an introduction to the Berkeley DeepDrive lab, followed by Ion Stoica’s overview of RISE. The event offered a great opportunity for researchers from both labs to exchange ideas about their ongoing research activity and discover points of collaboration. Philipp Moritz started the first student talk session with an update on Ray — a distributed execution framework for emerging…