Ray’s actor model and its simplicity of implementation in Python-based frameworks are a primary motivation for using it in a higher-level research framework (Realm, to be published) for real-time analytic monitoring of critical events (e.g., suicide risk, infectious diseases) that my colleagues and I are working on. The purpose of Realm is to provide real-time alerts in response to, for example, continuously occurring, high-frequency clinical events; support for online learning; distribution of model updates through the hierarchy of models (local/embedded, regional, and central); and targeted, selective, and context-specific updates to these models. While other frameworks provide most of these functions (Akka, Spark, Kafka, etc.), we are exploring and using Ray because of its simplicity of implementation (using actors…

Ray: Application-level scheduling with custom resources

Ray intends to be a universal framework for a wide range of machine learning applications. This includes distributed training, machine learning inference, data processing, latency-sensitive applications, and throughput-oriented applications. Each of these applications has different, and, at times, conflicting requirements for resource management. Ray intends to cater to all of them, as the newly emerging microkernel for distributed machine learning. In order to achieve that kind of generality, Ray enables explicit developer control with respect to the task and actor placement by using custom resources. In this blog post we are going to talk about use cases and provide examples. This article is intended for readers already familiar with…

An Open Source Tool for Scaling Multi-Agent Reinforcement Learning

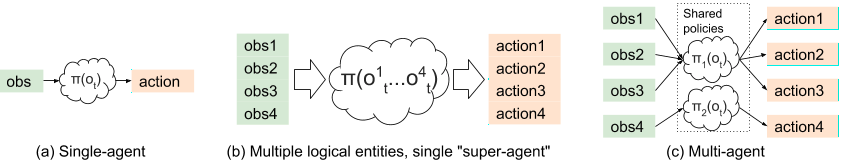

We just rolled out general support for multi-agent reinforcement learning in Ray RLlib 0.6.0. This blog post is a brief tutorial on multi-agent RL and how we designed for it in RLlib. Our goal is to enable multi-agent RL across a range of use cases, from leveraging existing single-agent algorithms to training with custom algorithms at large scale.

Implementing A Parameter Server in 15 Lines of Python with Ray

This blog post was originally posted here.

Distributed Policy Optimizers for Scalable and Reproducible Deep RL

In this blog post we introduce Ray RLlib, an RL execution toolkit built on the Ray distributed execution framework. RLlib implements a collection of distributed policy optimizers that make it easy to use a variety of training strategies with existing reinforcement learning algorithms written in frameworks such as PyTorch, TensorFlow, and Theano. This enables complex architectures for RL training (e.g., Ape-X, IMPALA) to be implemented once and reused many times across different RL algorithms and libraries. We discuss in more detail the design and performance of policy optimizers in the RLlib paper. What’s next for RLlib: In the near term, we plan to continue building out RLlib’s set of policy optimizers and algorithms. Our aim is for RLlib to serve…

Fast Python Serialization with Ray and Apache Arrow

This post was originally posted here. Robert Nishihara and Philipp Moritz are graduate students in the RISELab at UC Berkeley. This post elaborates on the integration between Ray and Apache Arrow. The main problem this addresses is data serialization. From Wikipedia, serialization is “… the process of translating data structures or object state into a format that can be stored … or transmitted … and reconstructed later (possibly in a different computer environment).” Why is any translation necessary? Well, when you create a Python object, it may have pointers to other Python objects, and these objects are all allocated in different regions of memory, and all of this has to make sense when unpacked by another process on another machine. Serialization and deserialization…
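To make the "pointers to other Python objects" point concrete, here is a minimal sketch of a serialization round trip. It uses the standard-library `pickle` module as a stand-in and does not show the Ray or Apache Arrow format the post goes on to describe; the variable names (`shared`, `record`, `payload`, `restored`) are made up for illustration.

```python
import pickle

# A small object graph: two dict entries point at the same list object,
# and a nested dict lives in yet another region of memory.
shared = [1, 2, 3]
record = {"a": shared, "b": shared, "meta": {"name": "example"}}

# Serialize: flatten the whole graph into a single bytes value that
# could be written to disk or sent to another process.
payload = pickle.dumps(record)

# Deserialize: rebuild an equivalent graph from the bytes, as a
# receiving process would.
restored = pickle.loads(payload)

print(isinstance(payload, bytes))       # True: one contiguous byte string
print(restored == record)               # True: same contents
print(restored is record)               # False: a new, independent copy
print(restored["a"] is restored["b"])   # True: the shared reference survives
```

The last line is the interesting one: the serializer has to record that both keys refer to one list, not two equal lists, which is part of why translating an object graph is more involved than copying raw memory.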

RISELab Announces 3 Open Source Releases

Part of the Berkeley tradition, and the RISELab mission, is to release open source software as part of our research agenda. Six months after launching the lab, we’re excited to announce initial v0.1 releases of three RISELab open-source systems: Clipper, Ground, and Ray. Clipper is an open-source prediction-serving system. Clipper simplifies deploying models from a wide range of machine learning frameworks by exposing a common REST interface and automatically ensuring low-latency and high-throughput predictions. In the 0.1 release, we focused on reliable support for serving models trained in Spark and Scikit-Learn. In the next release we will be introducing support for TensorFlow and Caffe2 as well as online personalization and multi-armed bandits. We are providing active support for early users and will be following GitHub issues…