The mission of the RISELab is to develop technologies that enable applications to make low-latency decisions on live data with strong security. One of the first steps towards achieving this goal is to study techniques to evaluate machine learning models and quickly render predictions. This missing piece of machine learning infrastructure, the prediction serving system, is critical to delivering real-time and intelligent applications and services.

As we studied the prediction-serving problem, two key challenges emerged. The first challenge is supporting the stringent performance demands of interactive serving workloads. As machine learning models improve they are increasingly being applied in business critical settings and user-facing interactive applications. This requires models to render predictions that can meet the strict latency requirements of interactive applications while scaling to bursty user-driven workloads. The second challenge is how to manage the large and growing ecosystem of machine learning models and frameworks, each with its own set of strengths and weaknesses. While the data science ecosystem provides a large toolbox to solve a diverse set of problems, different frameworks are written in different programming languages, require different hardware to run (such as CPUs vs GPUs), and can have orders of magnitude different computational costs. A general purpose prediction serving system must be flexible enough to serve models trained in any of these frameworks.

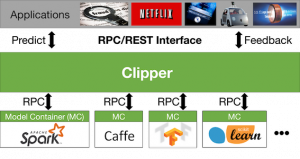

To address these challenges, we built Clipper, a general purpose, low-latency prediction serving system. Clipper sits in between frontend serving applications that query Clipper and machine learning frameworks that render predictions. By interposing between models and frontend applications, Clipper can meet the stringent performance demands of interactive applications while retaining the flexibility of serving models trained in any machine learning framework, and even serve models trained in different frameworks at the same time.

Clipper uses three key techniques to address the model serving challenges.

Clipper is a distributed serving system that runs in Docker containers, and each model deployed to Clipper runs in separate container that communicates with Clipper via a uniform RPC interface. This distributed architecture allows models to be deployed to Clipper with the original code and systems used during training, simplifying deployment and reducing error prone conversions from training to deployment frameworks. Containerized model deployment isolates models from each other which prevents a bug or failure in one model from inducing failures in the rest of the system. Finally, the distributed architecture allows Clipper to scale-out individual models to address the varied computational costs under heavy load.

Second, Clipper performs cross framework caching and batching to optimize throughput and latency. Clipper adaptively batches queries to each model, enabling the underlying frameworks to more effectively leverage parallel acceleration (e.g., math libraries, multi-threading, and GPUs). To maximize system performance while achieving strict latency goals, Clipper employs an adaptive latency-aware batching strategy to optimally tune the batch size on a per-model basis.

Finally, Clipper can perform cross-framework model composition to improve prediction accuracy. By using machine learning techniques like ensemble models and multi-armed bandit methods, Clipper can select and combine predictions from multiple models, even models trained in different machine learning frameworks, to provide more accurate and robust predictions. You can learn more about Clipper’s architecture and how it uses each of these techniques in our NSDI 2017 paper.

We are actively developing Clipper as an open-source prediction serving system intended for real use in production settings. We released the alpha version of Clipper in May with a focus on simplicity and stability. For example, you can start Clipper and and deploy Python and Spark models with just a few lines of Python. We’re working hard on the next release which will be in early August. This release will add support for deploying Clipper on Kubernetes and continue to simplify model deployment for more common frameworks like R, TensorFlow, and Keras. You can learn how to get started using Clipper at http://clipper.ai or check out the code and start contributing to the project on Github.